Localization & Mapping Systems Engineer

I am part of a team working with a major Original Equipment Manufacturer (OEM) to develop an autonomous driving solution.

The project aims to bring higher-end automated driving features to consumer vehicles.

The work spans the design and implementation of algorithms, sensor fusion systems, and control modules, all aimed at delivering a safe, reliable, and user-friendly autonomous driving experience.

Languages : AUTOSAR C++, Python, Matlab

Sensors : LiDAR, Camera, Radar, GPS, IMU

Localization & Mapping Algorithm Engineer

As a member of the Localization and Mapping team, I developed software components for the High-Precision Localization (HPL) and MapInterface modules.

I also carried out in-vehicle software testing to verify the reliability and performance of our solutions.

I was involved in the full software development lifecycle, from inception to start of production, including the integration of my work into the final product.

My tenure with the localization and mapping team was marked by a commitment to excellence and a drive to deliver innovative, high-quality software solutions that directly impacted the success of the projects I was involved in.

Languages : AUTOSAR C++, Python, Matlab

Sensors : LiDAR, Camera, Radar, GPS, IMU

Graduate Researcher

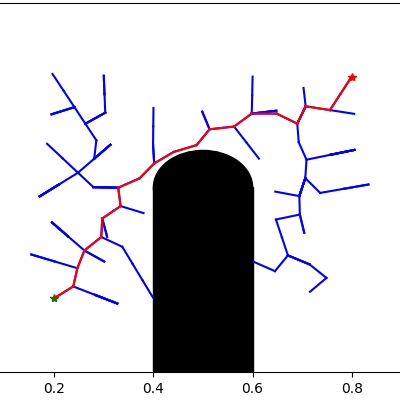

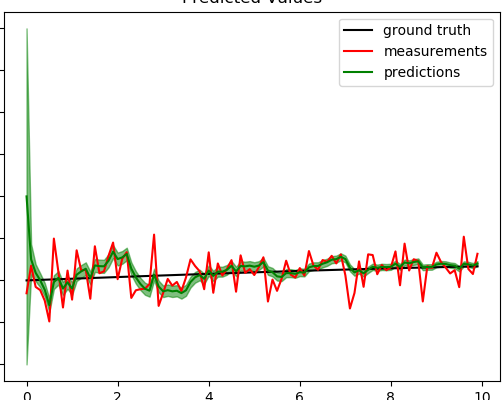

I worked with Prof. Jyotirmoy Deshmukh, developing monitoring algorithms for data streams generated by perception algorithms. The goal of the research is to enable safe autonomy.

In parallel with our research, we built USC's first autonomous delivery robot prototype from start to finish. It is a 1/10th-scale outdoor robot equipped with sensors and capable of real-time perception (object detection, multi-object tracking) and mapping.

Responsibility -

-

Lead the development, build, and bring-up execution of USC's first autonomous delivery vehicle prototype from start to finish. Software stack includes object detection, visual odometry, localization, planning, and controls.

-

Accelerate deployment and testing of real-time perception, SLAM, and tracking network onto the autonomous delivery robot prototype.

-

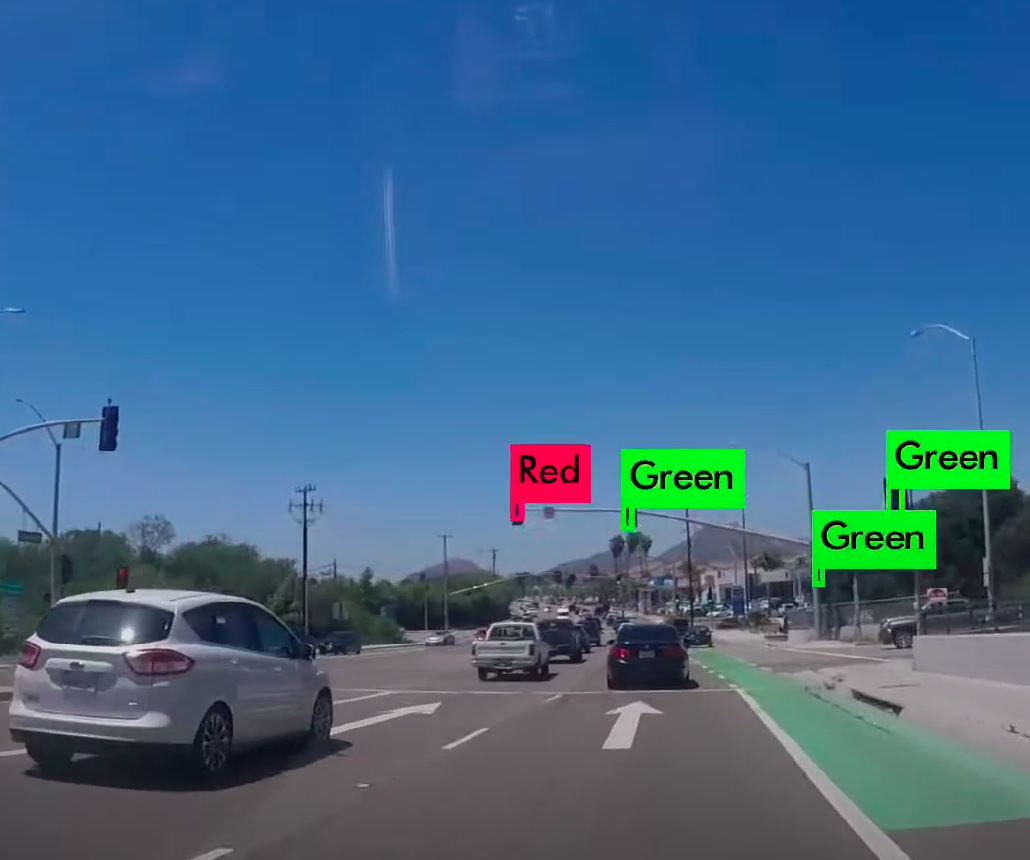

Spearhead research on developing Signal Temporal (STL) monitors, and vision-based Timed Quality Temporal (TQTL) monitors for ROS to track, and quantify perception robustness.

-

Integrate ROS multi-object tracking, lane-line detection, and semantic segmentation architectures with AV software stack.

Languages : C, C++, Python, Matlab

Sensors : ZED 3D Camera, Hokuyo LiDAR, VectorNav IMU

System on Chip : Jetson Xavier, Jetson TX2

Other : PCL, ROS, TensorFlow, Keras

Algorithms Include

-

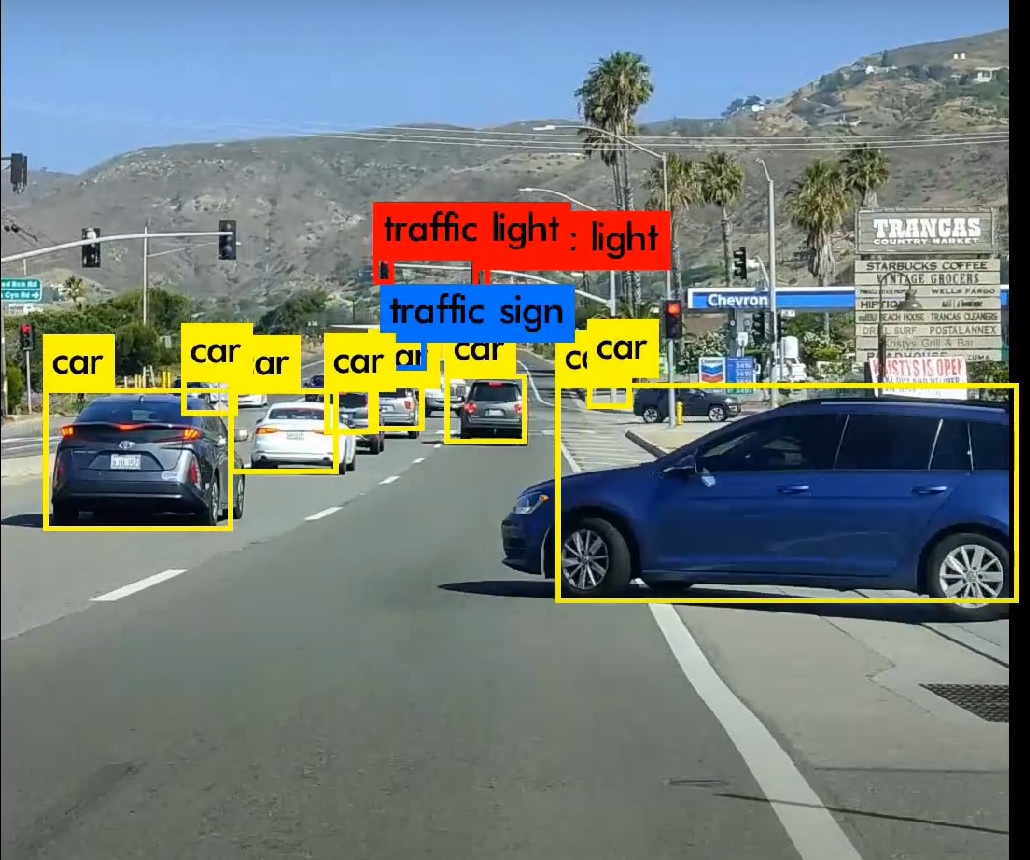

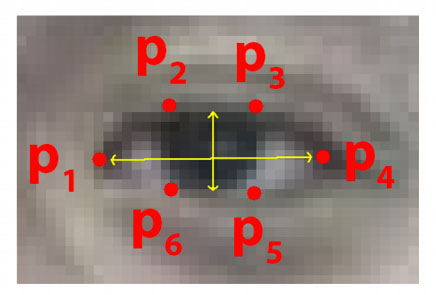

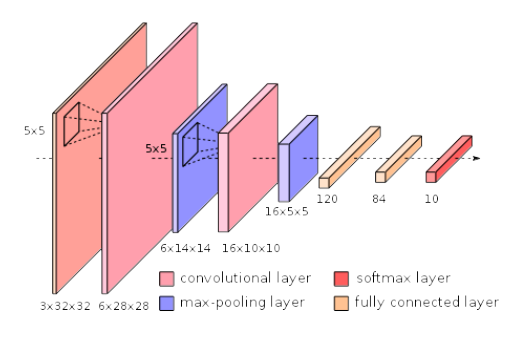

Object detection using Yolov3 capable of detecting road objects.

-

DeepSORT+ Yolov3 Deep Learning based Multi-Object Tracking in ROS.

-

Google's Cartographer SLAM

-

ROS Navigation for autonomous navigation

-

Semantic Segmentation using DeepLabV3+ (under progress)

Computer Vision Intern

Frenzy Labs, Inc. is an LA-based startup that develops self-labeling image technology that trains computer vision systems to detect exact products in complex visual scenes. They scale high-quality image datasets in a fraction of the time incurred by enterprises today and reduce manual labeling workforces by 99%.

Responsibility -

- Proposed and developed a network architecture integrating a state-of-the-art R-CNN RetinaNet object detection network with an H-CNN EfficientNet classification network, improving apparel classification/detection performance by 5%.

- Devised an end-to-end testing pipeline with RESTful request dispatching using the Flask framework to accelerate model evaluation and deployment with reproducibility and traceability.

- Optimized state-of-the-art H-CNN and R-CNN backbone networks for apparel localization and classification from an image, smart labeling, and product search from photographs and content. Trained on a dataset of ~3M images, increasing accuracy by 2%.

Languages : Python, HTML5

Libraries : Flask, Redis, CSS, TensorFlow, Keras, OpenCV

Reliance Industries Limited

Technical Manager

Responsibility -

- Designing plant control logic on Rockwell Automation's Programmable Logic Controllers (PLC), Schneider Electric's Distributed Control System (DCS), and the Emergency Shutdown System.

- Implementing process control schemes with PID control for efficient and reliable functioning of the reactor, cooler, and heater.

- Implementing design changes in field devices during plant turnaround and integrating control loops into the plant Distributed Control System (DCS).